Curious about applying machine learning to solve problems in industry? Here, I show how I used my data science knowledge to develop an AI solution for a real company.

In this post, we will go through the various stages of the project, from our starting point to our end goal. For the sake of readability, we will focus on the main aspects of developing the AI and will not delve into the technical details of data science or data engineering.

If you would like to learn more about this project, feel free to contact me directly.

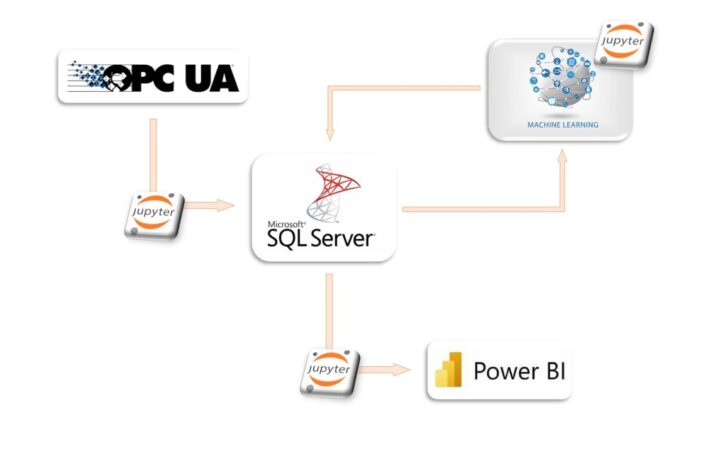

ARCHITECTURE

To develop our machine learning algorithm, it is essential to analyze all available tools that may help us reach our goals:

OPC UA SERVER

Production data repository, which functions as a database. This allows for easy extraction of historical data to process according to our needs, making it a perfect data source.

JUPYTER

Programming environment where we will train and deploy our AI forecaster. Python will be used as the main programming language for machine learning purposes.

SQL SERVER

Relational database where the processed data and the AI forecasts are stored, so that other tools such as Power BI can query them.
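As a minimal sketch of this storage step, assuming a hypothetical table `energy_forecast` with one row per machine and time frame, the snippet below uses Python's built-in `sqlite3` module as a stand-in so it runs anywhere. Against a real SQL Server you would open the connection with a driver such as `pyodbc` and replace SQLite's `INSERT OR REPLACE` with a `MERGE` statement. The values are toy numbers, not project data.

```python
import sqlite3

# Hypothetical schema: measured and forecast consumption side by side,
# so a reporting tool can compare them directly.
SCHEMA = """
CREATE TABLE IF NOT EXISTS energy_forecast (
    time_frame   TEXT NOT NULL,
    machine_id   TEXT NOT NULL,
    real_kwh     REAL,
    forecast_kwh REAL NOT NULL,
    PRIMARY KEY (time_frame, machine_id)
)
"""

def save_forecasts(conn, rows):
    """Insert or update rows given as (time_frame, machine_id, real_kwh, forecast_kwh)."""
    conn.execute(SCHEMA)
    # SQLite-specific upsert; SQL Server would use MERGE instead.
    conn.executemany("INSERT OR REPLACE INTO energy_forecast VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the SQL Server connection
save_forecasts(conn, [
    ("2023-01-01T00:00", "M1", 120.5, 118.0),  # toy values
    ("2023-01-01T00:00", "M2", 98.0, 101.2),
])
for row in conn.execute("SELECT machine_id, forecast_kwh FROM energy_forecast ORDER BY machine_id"):
    print(row)
```

The composite primary key means re-running a forecast for the same machine and time frame overwrites the old value instead of duplicating it.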

POWER BI

Data visualization tool that connects the world of big data with the company's daily activities, making every milestone visible to everyone at any moment.

DATA PIPELINE

By putting together all the pieces of the puzzle, we can create a data pipeline, which can be summarized in the following outline:

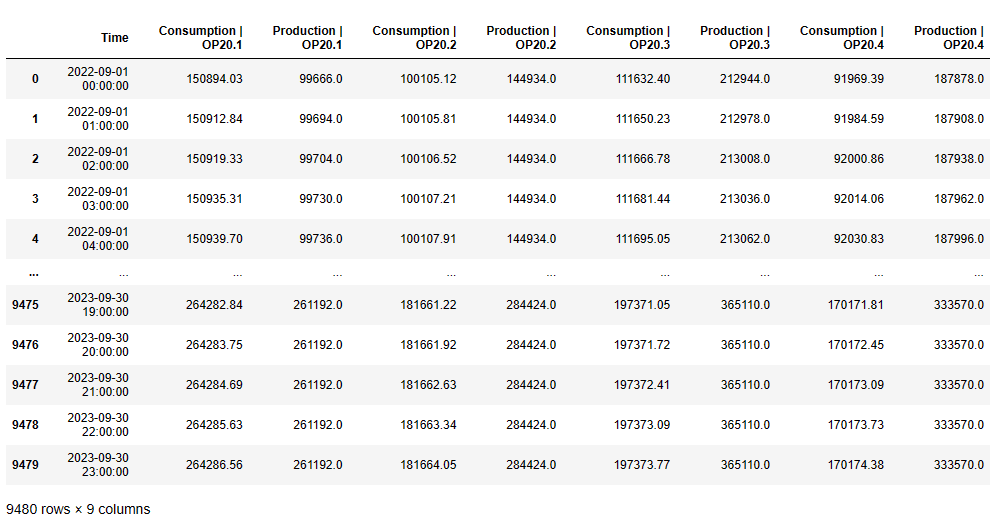
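The pipeline outlined above can be sketched as four plain Python functions chained together. This is a minimal illustration, not the project's code: `extract` returns hard-coded toy samples where the real pipeline would read historical values from the OPC UA server, `forecast` uses a hypothetical fixed kWh-per-unit ratio in place of the trained model, and `load` appends to a list where the real pipeline would write to SQL Server.

```python
from collections import defaultdict
from datetime import datetime

KWH_PER_UNIT = 0.4  # hypothetical ratio standing in for the trained model

def extract():
    """Stand-in for reading historical values from the OPC UA server.
    Returns raw samples as (timestamp, machine_id, units_produced, energy_kwh)."""
    return [
        (datetime(2023, 1, 1, 8, 15), "M1", 10, 4.0),
        (datetime(2023, 1, 1, 8, 45), "M1", 12, 4.5),
        (datetime(2023, 1, 1, 9, 10), "M1", 9, 3.8),
        (datetime(2023, 1, 1, 8, 30), "M2", 20, 7.1),
    ]

def transform(samples):
    """Aggregate raw samples into hourly time frames per machine."""
    totals = defaultdict(lambda: [0, 0.0])  # (hour, machine) -> [units, kWh]
    for ts, machine, units, kwh in samples:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        totals[(hour, machine)][0] += units
        totals[(hour, machine)][1] += kwh
    return sorted((hour, machine, units, kwh) for (hour, machine), (units, kwh) in totals.items())

def forecast(rows):
    """Placeholder model: appends a predicted consumption to every row."""
    return [(hour, machine, units, kwh, units * KWH_PER_UNIT) for hour, machine, units, kwh in rows]

def load(rows, sink):
    """Stand-in for writing to SQL Server: here the rows are appended to a list."""
    sink.extend(rows)

def run_pipeline(sink):
    load(forecast(transform(extract())), sink)

table = []
run_pipeline(table)
for row in table:
    print(row)
```

Keeping each stage a separate function makes it easy to swap the stand-ins for the real OPC UA reader, the trained model and the database writer one at a time.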

DATASET

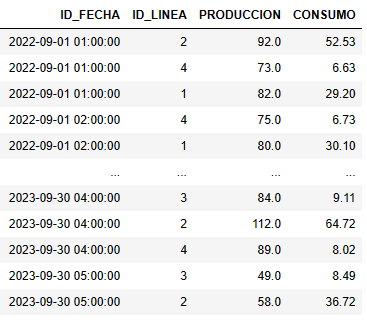

To make it easier to follow, the image shows an example of the dataset's format and size.

AI FORECASTER

We must take into account how critical the training stage of our algorithm is. Remember:

"Teach them how to think, not what to think."

This quote reflects the main goal when training an AI. Most of the time, people tend to focus excessively on the training data, ending up with what is called overfitting (or overtraining) of the model: the system learns the particularities and noise of the training set and does not give reliable forecasts when facing new data.

For this reason, thorough data processing and a sound training procedure are needed.
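To make overfitting concrete, here is a small experiment on synthetic data (made-up numbers, not the project's dataset). It compares a straight-line fit with an extreme "memorizer" that simply returns the energy of the closest training example. The memorizer scores a perfect training error, yet it typically does worse than the simple line on data it has not seen, which is the trap described above.

```python
import random
from statistics import mean

random.seed(0)

# Synthetic toy data: energy grows linearly with production, plus noise.
production = [random.uniform(0, 100) for _ in range(1000)]
energy = [0.5 * p + random.gauss(0, 5) for p in production]
train_x, test_x = production[:700], production[700:]
train_y, test_y = energy[:700], energy[700:]

def fit_linear(xs, ys):
    """Ordinary least squares for y = a * x + b."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: a * x + (my - a * mx)

def fit_memorizer(xs, ys):
    """'Learns' the training set by heart: predicts the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mae(model, xs, ys):
    """Mean absolute error of a model on a dataset."""
    return mean(abs(model(x) - y) for x, y in zip(xs, ys))

for name, fit in [("linear", fit_linear), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    print(f"{name}: train MAE={mae(model, train_x, train_y):.2f}, "
          f"test MAE={mae(model, test_x, test_y):.2f}")
```

Only the held-out columns reveal the problem: judged on training data alone, the memorizer would look like the better model.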

This is what our dataset looks like right before training the ML algorithm, where each record contains:

- The time frame in which the data was collected.

- The identifier of the affected machine.

- The production during that time frame.

- The energy consumption during that time frame.
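In code, one record of this dataset could be represented as below. The field names (`time_frame`, `machine_id`, `production`, `energy_kwh`) and the CSV layout are hypothetical, chosen only to mirror the four columns described above. The parser also drops rows with missing or negative values as a small example of the data processing step.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Record:
    time_frame: datetime  # start of the time frame in which the data was collected
    machine_id: str       # identifies the affected machine
    production: int       # units produced during the time frame
    energy_kwh: float     # energy consumed during the time frame

def parse_records(text):
    """Parse CSV text into Records, skipping malformed rows and negative values."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            rec = Record(
                time_frame=datetime.fromisoformat(row["time_frame"]),
                machine_id=row["machine_id"],
                production=int(row["production"]),
                energy_kwh=float(row["energy_kwh"]),
            )
        except (KeyError, ValueError):
            continue  # missing or unparsable field: drop the row
        if rec.production >= 0 and rec.energy_kwh >= 0:
            records.append(rec)
    return records

# Toy CSV; the second row has a missing production value and is dropped.
SAMPLE = """time_frame,machine_id,production,energy_kwh
2023-01-01T08:00,M1,22,8.5
2023-01-01T08:00,M2,,7.1
2023-01-01T09:00,M1,9,3.8
"""
records = parse_records(SAMPLE)
for rec in records:
    print(rec)
```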

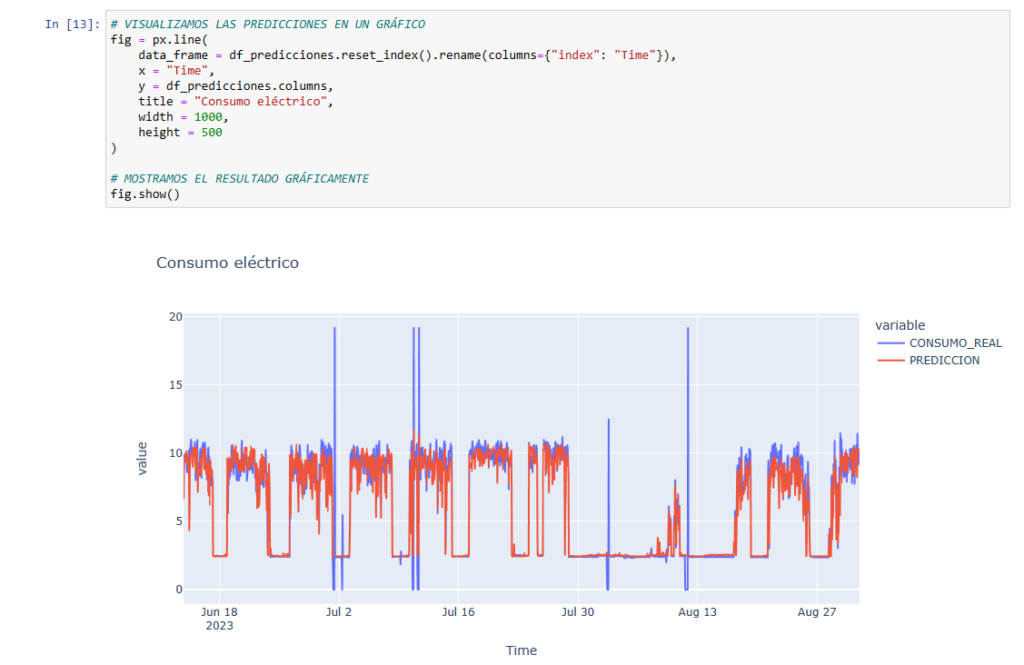

The output of our AI after the training stage compares real consumption data against the AI predictions.
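A comparison like this is only meaningful if the predictions are made on data the model did not see during training. Since energy consumption is a time series, a common approach is to split the records chronologically instead of shuffling them, so the model is always evaluated on later periods. The sketch below assumes records are tuples whose first element is the timestamp; the 70/15/15 proportions and the toy records are illustrative choices, not the project's settings.

```python
from datetime import datetime, timedelta

def chronological_split(records, train_frac=0.7, val_frac=0.15):
    """Split time-stamped records into train, validation and test sets without shuffling.

    Records are sorted by their first element (the timestamp), so validation and
    test records never precede the records the model was trained on.
    """
    ordered = sorted(records, key=lambda r: r[0])
    n_train = int(len(ordered) * train_frac)
    n_val = int(len(ordered) * val_frac)
    return (
        ordered[:n_train],
        ordered[n_train:n_train + n_val],
        ordered[n_train + n_val:],
    )

# Toy hourly records: (time_frame, machine_id, production, energy_kwh).
start = datetime(2023, 1, 1)
records = [(start + timedelta(hours=h), "M1", 10 + h % 5, 4.0 + 0.4 * (h % 5)) for h in range(100)]
train, val, test = chronological_split(records)
print(len(train), len(val), len(test))
```

A random shuffle would let the model peek at neighbouring future hours during training, which tends to make the real-versus-predicted comparison look better than it will be in production.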

Reaching this point, we should ask ourselves:

Is it enough?

And the answer should always be no. As data scientists, it is our responsibility to quantify how good these predictions are and compare them against alternatives: never rely on an image, rely on numbers!

However, going into that evaluation in detail is beyond the scope of this post.
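As a starting point, these are standard regression metrics for comparing real consumption against predictions: mean absolute error (MAE), root mean squared error (RMSE), mean absolute percentage error (MAPE) and the coefficient of determination (R²). Which metrics the project itself relied on is not stated here; the function below is a minimal pure-Python sketch, and libraries such as scikit-learn offer equivalents in `sklearn.metrics`.

```python
from math import sqrt

def regression_metrics(real, predicted):
    """Return MAE, RMSE, MAPE (%) and R² for two equal-length, non-empty sequences."""
    if len(real) != len(predicted) or len(real) == 0:
        raise ValueError("expected two non-empty sequences of equal length")
    n = len(real)
    errors = [p - r for r, p in zip(real, predicted)]
    mean_real = sum(real) / n
    ss_res = sum(e * e for e in errors)               # residual sum of squares
    ss_tot = sum((r - mean_real) ** 2 for r in real)  # total sum of squares
    # MAPE is undefined where the real value is zero, so those points are skipped.
    ratios = [abs(e / r) for e, r in zip(errors, real) if r != 0]
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "RMSE": sqrt(ss_res / n),
        "MAPE_%": 100 * sum(ratios) / len(ratios) if ratios else float("nan"),
        "R2": 1 - ss_res / ss_tot if ss_tot else float("nan"),
    }

# Toy values for illustration only.
print(regression_metrics([100, 200, 300], [110, 190, 300]))
```

MAE and MAPE are easy to explain to non-specialists (kWh and percent off on average), while RMSE penalizes occasional large misses more heavily; reporting more than one gives a fuller picture.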

DATA VISUALIZATION

This final step may not be the most challenging, but it holds a significant truth:

"A picture is worth a thousand words."

The most effective approach to incorporating big data into your company is to provide a clear demonstration of its capabilities and establish a user-friendly management system that enables employees to interact with the data and achieve their objectives.

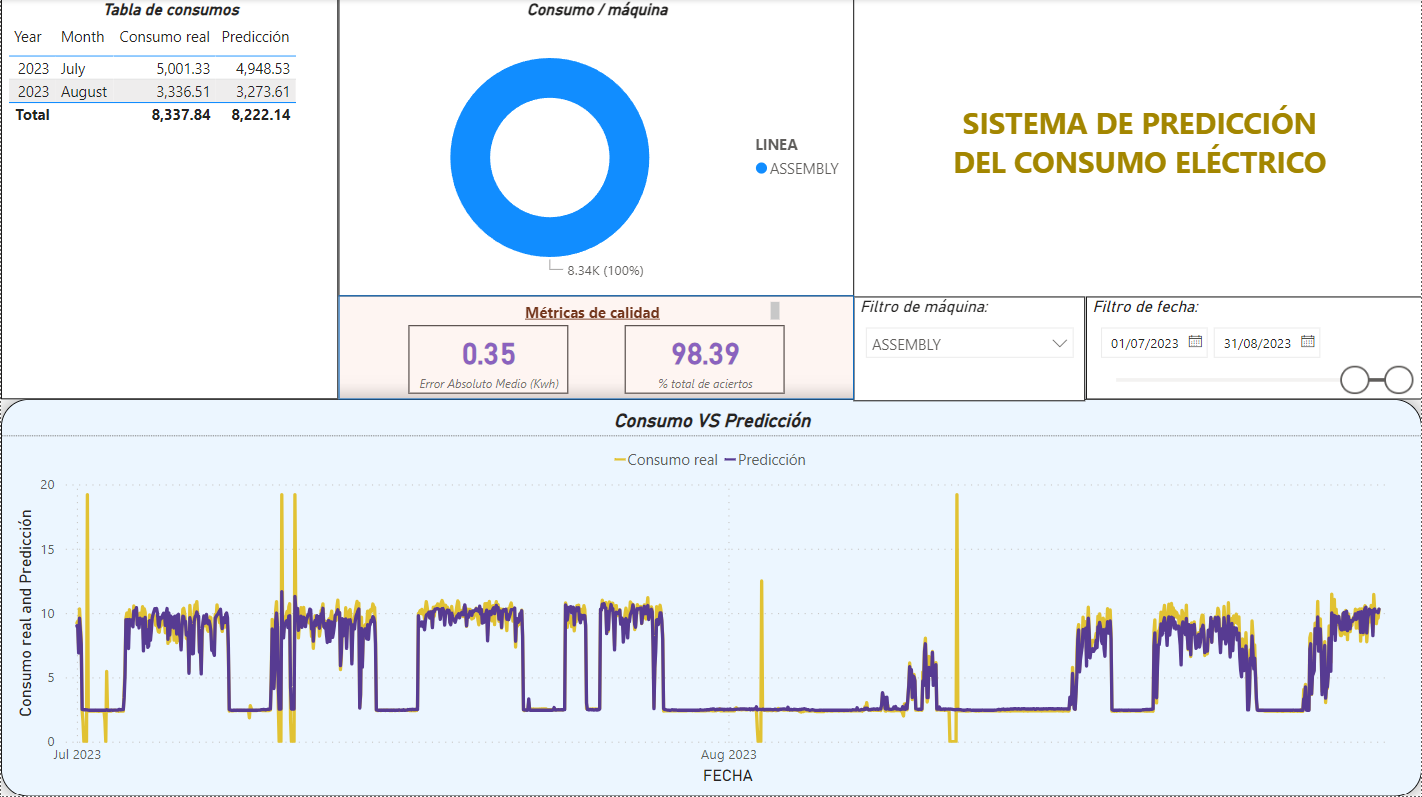

Through this interactive Power BI screen, we share some important insights:

- Improve your understanding of machine behaviour by tracking monthly energy consumption.

- Check the main quality metrics of the ML model.

- Easily track the energy consumption forecast and compare it with real consumption.
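The monthly view and the real-versus-forecast comparison boil down to a simple aggregation. As a sketch, assuming the forecasts are stored as `(time_frame, machine_id, real_kwh, forecast_kwh)` rows (a hypothetical layout), the totals per month and machine, plus the percentage deviation of the forecast, could be computed in Python before loading; the same aggregation could equally be done inside Power BI itself.

```python
from collections import defaultdict
from datetime import datetime

def monthly_summary(rows):
    """Aggregate (time_frame, machine_id, real_kwh, forecast_kwh) rows per month and machine."""
    totals = defaultdict(lambda: [0.0, 0.0])  # (month, machine) -> [real, forecast]
    for time_frame, machine, real, forecast in rows:
        key = (time_frame.strftime("%Y-%m"), machine)
        totals[key][0] += real
        totals[key][1] += forecast
    summary = {}
    for key, (real, forecast) in sorted(totals.items()):
        # Positive deviation means the forecast overestimated the real consumption.
        deviation = 100 * (forecast - real) / real if real else float("nan")
        summary[key] = {"real_kwh": real, "forecast_kwh": forecast, "deviation_%": deviation}
    return summary

# Toy rows for illustration only.
rows = [
    (datetime(2023, 1, 5), "M1", 100.0, 110.0),
    (datetime(2023, 1, 20), "M1", 50.0, 40.0),
    (datetime(2023, 2, 3), "M1", 200.0, 210.0),
]
for (month, machine), values in monthly_summary(rows).items():
    print(month, machine, values)
```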

Big J Insights

This is a brief introduction to my AI project. I hope you find it inspiring, and I can't wait for you to start your own!